Today’s post is just a short essay describing my experiences with Transkribus Lite and creating my first custom Transkribus model for handwritten text. You might remember from How to historical text recognition: A Transkribus Quickstart Guide that I usually only work with print and reuse the great Noscemus models. I had also refrained from training my own models in the past because a) I didn’t really need one and b) it seemed too complicated to just quickly play around with it. Both those things have changed in the meantime so I gave it a go with the Transkribus Lite web interface. Here are my experiences!

Act 1. Studierzimmer

Faust mit dem Pudel hereintretend. Eeeehrm, wait, wrong story. Late at night on my sofa is more like it.

Yay, I finally did it. Trained my first own model on Transkribus and, at the same time, used the opportunity to see a bit more of Transkribus Lite and I have to say: wow, the usability is really great. Totally loved it!

As you might remember from the post How to historical text recognition: A Transkribus Quickstart Guide, back then I still felt the need to write this “quickstart guide” because Transkribus already had cool features but I think that, like me, most users didn’t even need all those features. Still they made the whole interface chaotic and a bit hard to operate for all those users who didn’t need most of them.

Transkribus Lite totally responds to this need (that I assume lots of people, like me, must have) to primarily use Transkribus for simpler tasks but then still have the possibility to double back to the old software when you want to make use of its deeper configuration abilities.

Act 2. What I did

So how did I do this? I’m not going to give a tutorial this time because, honestly, I found the process so intuitive that the teaching material provided (like this YouTube video on how to train your own model or this post or this) sufficed to get started. Now my model is running and I’m excited to see how it performs.

As you might be aware from my last post, I am super lazy and I really don’t want to transcribe any more text than absolutely necessary 😉 (the ability to automate tedious tasks was definitely one of the main motivators for me initially going into the Digital Humanities). So obviously, I didn’t provide an enormous amount of training material. Depending on how well the model does, I will run it on my texts, do manual corrections and then train the model again if I have to. Although generally, I can’t wait for when the model is finally done so I don’t need to do anything by hand anymore 😀

Actually, I wanted to give this project to a student but nobody chose it from the list of available projects (they seem to have preferred using regex to automate the TEI encoding of alchemical dictionaries, which admittedly is a fascinating task too; let’s see how they do).

However, putting all the transcription I already had into Transkribus (and adapting the transcription style a little bit to fit my needs) took me only around two to three hours. Thus, if the model comes out more or less useful, there isn’t that much left to do. So that might not have been big enough for a student project anyway.

Still, I’m very excited about this whole thing because a) alchemy and b) I have spent years thinking I’d have to pay 300€ for the digitization of the document I wanted. Then I asked again this year and some funding stuff had changed for the library, so they digitized it for free.

Of course, I immediately had to embark on this adventure of trying to generate a transcription of my text and see how well that process of training your own Transkribus model actually goes. So basically I’m following this hunch; I’m not sure if I should call it “curiosity” or “procrastination”.

Anyway, I shouldn’t be blogging while I wait for my model to be trained because that might take a while and I’ll end up spamming you with lots of irrelevant information. So with that,

Ninja out for now 😉

Act 3. The Aftermath

The next morning. Studierzimmer, mit Kaffee hereintretend.

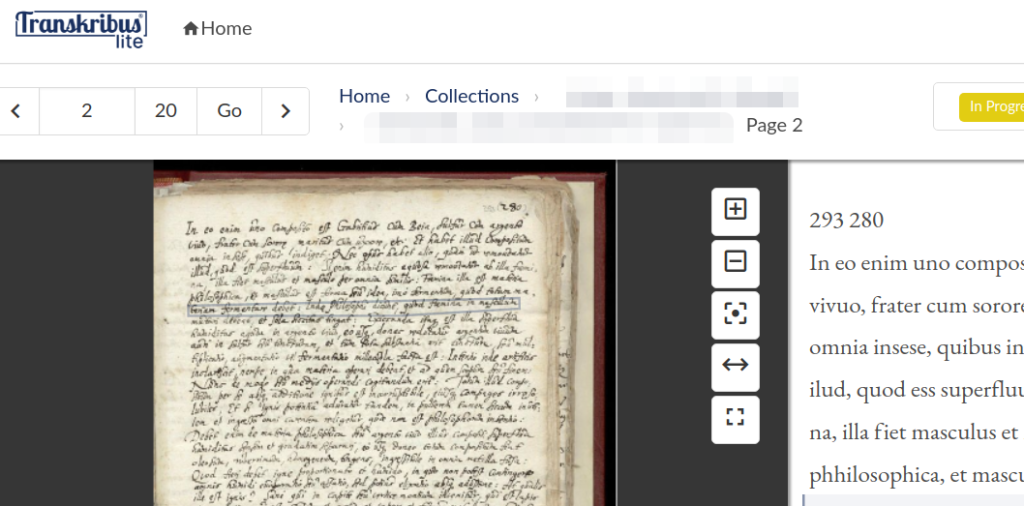

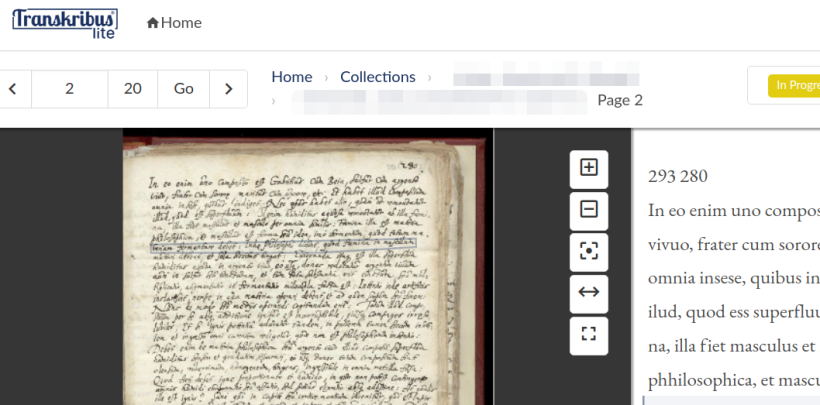

So I ended up spending around three hours last night putting the 17 pages of existing manual transcription (by a collaborator/colleague of mine) into Transkribus, evening out some inconsistencies and such. The model was trained pretty quickly (maybe the servers weren’t that busy at this odd time and also, the training set really wasn’t that big).

I chose the automatic validation on 10% of the training data, on which it performed really well (the model was very proud of itself, but I guess there is quite a bit of overfitting involved, given that it was trained and tested on such a limited base of data). The resulting model actually came back last night, so I ran it on two more texts I also had from the same author. One is another short letter tract that looks very similar and was digitized by the same library; the other is the reason why I wanted to train this model in the first place. It’s 130 leaves, i.e. 260 pages, and this autograph manuscript is rather messier than the aforementioned letters. I assume that this one was intended for the author’s personal use rather than for sending to a potential future patron (like the texts the model was trained on).
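To make the overfitting worry a bit more concrete, here is a minimal Python sketch of what a 10% hold-out means for 17 pages, plus a plain character error rate (CER), the kind of score usually used to judge HTR output. This is not how Transkribus does it internally: the function names (`split_pages`, `edit_distance`, `character_error_rate`), the rounding rule and the example strings are all assumptions made up for illustration.

```python
import random


def split_pages(pages, validation_share=0.1, seed=0):
    """Randomly hold out a share of the pages for validation (at least one page).

    The rounding rule is an assumption, not necessarily what Transkribus uses.
    """
    shuffled = list(pages)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * validation_share))
    return shuffled[n_val:], shuffled[:n_val]


def edit_distance(a, b):
    """Levenshtein distance: minimum number of insertions, deletions, substitutions."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,          # delete char_a
                               current[j - 1] + 1,       # insert char_b
                               previous[j - 1] + cost))  # substitute or match
        previous = current
    return previous[-1]


def character_error_rate(reference, hypothesis):
    """Edit distance divided by the length of the manually checked reference."""
    if not reference:
        raise ValueError("reference transcription must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


# Hypothetical page names standing in for the 17 transcribed pages.
pages = [f"page_{n:02d}" for n in range(1, 18)]
train, validation = split_pages(pages)
print(len(train), len(validation))

# Invented example line: one wrong character in a 19-character reference.
cer = character_error_rate("lapis philosophorum", "lapis philosopborum")
print(f"{cer:.3f}")
```

With only a couple of validation pages, written in the same neat hand as the training pages, a low error rate says little about how the model will cope with messier material.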

Thus, the handwriting is quite a bit uglier, the ink is more faded on many of the leaves and there are tons of crossings-out. Accordingly, the transcription wasn’t perfect, though not super bad either. I will definitely use this new base of data to quickly proofread and correct some pages in both the second letter tract and the messy manuscript. That way, I’m hoping the model will get better and I can use it on the MS again, providing a better result. Thankfully I have translated this author quite a bit so I kind of know what he tends to be talking about. That makes proofreading much quicker because most times, I already know what he wanted to say from the context of the Latin sentences, even without actively scrutinizing the handwriting. Know your sources!

Anyway, I’m super motivated right now to get this model up to speed and then also share it for others to reuse. However, I’m not sure there are actually any other MS in the hand of this author extant, so it might not be of that much use. Still, I’m secretly hoping we might find more of them hidden in some archive in the future!

Outro

So that was my live recounting of the events around training my first own Transkribus model. Now that the adrenaline has faded, I have realized that I will, in fact, need to do quite a bit more proofreading to improve the model. For which, if we’re being honest, I actually don’t currently have the time. Maybe I should have made it a student project after all 😉 If you’re a student who is interested in such things and you’re reading this not too long after posting (say sometime before 2024), chances are that I could still use your help if you want some experience using Transkribus and transcribing (relatively pretty) 17c handwriting.

Anyway, it was still a fun experience and I can recommend both training your own Transkribus models, should the need arise, and using the Transkribus Lite web editor. It has become really intuitive, so kudos to the Transkribus team!

That’s it for today,

the Ninja

